Making a main field model#

Here we demonstrate how to make a simple time-independent main field model by fitting spherical harmonics to ground observatory measurements.

The principles of spherical harmonic analysis have been shown in the previous pages, so here we will take a shortcut and use utilties from ChaosMagPy

Clemens Kloss et al. (2025). ancklo/ChaosMagPy: ChaosMagPy v0.15 (v0.15). Zenodo. https://doi.org/10.5281/zenodo.16091680

We will use:

design_gauss to generate the design matrix for the inversion

synth_values to run the forward model to get predictions for the magnetic field from our model

hvPlot is used to create some fancy visualisations but this library is more complex to use! so if you are newer to the Python ecosystem, you are better off using Matplotlib for your own work.

# Install pooch (not currently in VRE)

%pip install pooch

Show code cell output

Requirement already satisfied: pooch in /opt/conda/lib/python3.11/site-packages (1.8.2)

Requirement already satisfied: platformdirs>=2.5.0 in /opt/conda/lib/python3.11/site-packages (from pooch) (4.0.0)

Requirement already satisfied: packaging>=20.0 in /opt/conda/lib/python3.11/site-packages (from pooch) (23.2)

Requirement already satisfied: requests>=2.19.0 in /opt/conda/lib/python3.11/site-packages (from pooch) (2.31.0)

Requirement already satisfied: charset-normalizer<4,>=2 in /opt/conda/lib/python3.11/site-packages (from requests>=2.19.0->pooch) (3.3.2)

Requirement already satisfied: idna<4,>=2.5 in /opt/conda/lib/python3.11/site-packages (from requests>=2.19.0->pooch) (3.6)

Requirement already satisfied: urllib3<3,>=1.21.1 in /opt/conda/lib/python3.11/site-packages (from requests>=2.19.0->pooch) (2.1.0)

Requirement already satisfied: certifi>=2017.4.17 in /opt/conda/lib/python3.11/site-packages (from requests>=2.19.0->pooch) (2024.12.14)

WARNING: Running pip as the 'root' user can result in broken permissions and conflicting behaviour with the system package manager, possibly rendering your system unusable. It is recommended to use a virtual environment instead: https://pip.pypa.io/warnings/venv. Use the --root-user-action option if you know what you are doing and want to suppress this warning.

[notice] A new release of pip is available: 25.1 -> 25.2

[notice] To update, run: pip install --upgrade pip

Note: you may need to restart the kernel to use updated packages.

import pooch

from chaosmagpy.model_utils import design_gauss, synth_values, power_spectrum

from chaosmagpy.plot_utils import plot_power_spectrum

import numpy as np

import pandas as pd

import xarray as xr

import matplotlib.pyplot as plt

import cartopy.crs as ccrs

import cartopy.feature as cfeature

import hvplot.xarray

ERROR 1: PROJ: proj_create_from_database: Open of /opt/conda/share/proj failed

Loading some data#

We’ll load a pre-prepared dataset containing annual means derived from observatory data. We just select a single year from this dataset. In reality the main field varies on shorter time scales but these annual data points are useful enough for a low resolution model.

# Download annual means dataset

# https://geomag.bgs.ac.uk/data_service/data/annual_means.shtml

oamjumpsapplied = pooch.retrieve(

"https://raw.githubusercontent.com/MagneticEarth/IAGA_SummerSchool2019/master/data/external/oamjumpsapplied.dat",

known_hash="dee77ded79cb0d86e3366a550830cad9de741322212faf9a74f3d03cb6bdd872"

)

def load_data(year=2000.5):

df = pd.read_csv(oamjumpsapplied, delim_whitespace=True)

df = df.where(df["year"] == 2000.5).dropna()

df = df.reset_index(drop=True)

# Screen out some bad data points

df = df.where(df["Z"] != 88888).dropna()

_ds = df.to_xarray()

_ds["Latitude"] = 90 - _ds["Colat"]

_ds["Longitude"] = (_ds["Long"] % 360 + 540) % 360 - 180

_ds = _ds.drop_vars("index")

return _ds

input_data = load_data(year=2000.5)

input_data

<xarray.Dataset>

Dimensions: (index: 164)

Dimensions without coordinates: index

Data variables: (12/14)

Code (index) object 'ALE' 'NAL' 'CCS' 'THL' ... 'VNA' 'SBA' 'VOS'

Colat (index) float64 7.5 11.08 12.28 12.52 ... 158.6 160.7 167.8 168.4

Long (index) float64 297.6 11.93 104.3 290.8 ... 351.8 166.8 106.9

alt(m) (index) float64 60.0 12.0 10.0 57.0 ... 0.0 40.0 10.0 3.5e+03

year (index) float64 2e+03 2e+03 2e+03 2e+03 ... 2e+03 2e+03 2e+03

D (index) float64 -67.51 0.332 14.76 -63.17 ... -12.91 155.3 -120.7

... ...

X (index) float64 1.319e+03 7.263e+03 ... -1.018e+04 -6.859e+03

Y (index) float64 -3.186e+03 42.0 746.0 ... 4.692e+03 -1.156e+04

Z (index) float64 5.555e+04 5.384e+04 ... -6.622e+04 -5.819e+04

F (index) float64 5.566e+04 5.433e+04 ... 6.716e+04 5.972e+04

Latitude (index) float64 82.5 78.92 77.72 77.48 ... -70.65 -77.85 -78.45

Longitude (index) float64 -62.35 11.93 104.3 -69.17 ... -8.25 166.8 106.9def plot_obs_measurements(ds=input_data):

return ds.hvplot.scatter(

x="Longitude", y="Latitude", c="Z",

coastline=True, projection=ccrs.PlateCarree(), global_extent=True,

clabel="Data: vertical (downwards) magnetic field (nT)"

)

plot_obs_measurements(input_data)

WARNING:param.main: geo option cannot be used with kind='scatter' plot type. Geographic plots are only supported for following plot types: ['bivariate', 'contour', 'contourf', 'dataset', 'hexbin', 'image', 'labels', 'paths', 'points', 'points', 'polygons', 'quadmesh', 'rgb', 'vectorfield']

/opt/conda/lib/python3.11/site-packages/xarray/core/utils.py:494: FutureWarning: The return type of `Dataset.dims` will be changed to return a set of dimension names in future, in order to be more consistent with `DataArray.dims`. To access a mapping from dimension names to lengths, please use `Dataset.sizes`.

warnings.warn(

Building a model#

Suppose we have data,

Note that:

A least-squares solution to

# Identify coordinates and measurements

theta = input_data["Colat"]

phi = input_data["Long"]

radius = 6371.2 + input_data["alt(m)"]/1e3

B_radius = -input_data["Z"]

B_theta = -input_data["X"]

B_phi = input_data["Y"]

# Build the design matrix

N_max = 6

G_radius, G_theta, G_phi = design_gauss(radius, theta, phi, nmax=N_max, source="internal")

# Perform the inversion

G = np.concatenate((G_radius, G_theta, G_phi))

d = np.concatenate((B_radius, B_theta, B_phi))

m = np.linalg.inv(G.T @ G) @ (G.T @ d)

m

array([-2.98706210e+04, -1.72878664e+03, 5.10437604e+03, -2.36677354e+03,

3.21808504e+03, -2.60186018e+03, 1.81146058e+03, -4.40900880e+02,

1.20096209e+03, -2.28721723e+03, -2.58558551e+02, 1.28174523e+03,

2.26792327e+02, 7.08225964e+02, -5.55967072e+02, 8.56002210e+02,

8.54262291e+02, 1.88225598e+02, 2.21210848e+02, -1.75262151e+02,

-3.56256647e+02, 2.20607631e+02, 1.12133919e+02, -3.04804983e+02,

-2.21046651e+02, 1.77423732e+02, -2.70829977e+01, 2.26917637e+02,

1.30066476e+02, -1.93969550e+01, -1.25596377e+02, -1.97358431e+02,

5.34487565e+00, 5.21247100e+01, 1.36761971e+02, 8.68218607e+01,

-1.15948032e+01, -1.82150875e+02, 3.19243839e+02, -5.68244622e+01,

-7.25136804e+01, 1.65716700e+02, -6.60842751e+01, -5.59273212e+01,

-9.38254025e+00, 2.39803027e+01, -1.40908041e+02, 1.34255677e+01])

Since we do not have many data, we would need to be more sophisticated with the inversion procedure (e.g. including regularisation) to get a model of higher resolution. For this reason, the model is truncated at spherical harmonic degree 6. You can explore increasing this limit.

Exercise#

What is the shape of the design matrix?

What is the amplitude of the axial dipole (

How good is our model?#

Let’s evaluate the model over the Earth and plot contours…

def build_model(ds=input_data, nmax=10):

"""Returns Gauss coefficients for a simple SH model"""

theta = ds["Colat"]

phi = ds["Long"]

radius = 6371.2 + ds["alt(m)"]/1e3

B_radius = -ds["Z"]

B_theta = -ds["X"]

B_phi = ds["Y"]

# Build the design matrix

G_radius, G_theta, G_phi = design_gauss(radius, theta, phi, nmax=nmax, source="internal")

# Perform the inversion

G = np.concatenate((G_radius, G_theta, G_phi))

d = np.concatenate((B_radius, B_theta, B_phi))

m = np.linalg.inv(G.T @ G) @ (G.T @ d)

return m

def sample_model_on_grid(m, radius=6371.200):

"""Evaluate Gauss coefficients over a regular grid over Earth"""

theta = np.arange(1, 180, 1)

phi = np.arange(-180, 180, 1)

theta, phi = np.meshgrid(theta, phi)

B_r, B_t, B_p = synth_values(m, radius, theta, phi)

ds_grid = xr.Dataset(

data_vars={"B_NEC_model": (("y", "x", "NEC"), np.stack((-B_t, B_p, -B_r), axis=2))},

coords={"Latitude": (("y", "x"), 90-theta), "Longitude": (("y", "x"), phi), "NEC": np.array(["N", "E", "C"])}

)

return ds_grid

def plot_model_contours(m):

ds_grid = sample_model_on_grid(m)

# Generate contour plot of vertical component

return ds_grid.sel(NEC="C").hvplot.contourf(

x="Longitude", y="Latitude", c="B_NEC_model",

levels=30, coastline=True, projection=ccrs.PlateCarree(), global_extent=True,

clabel="Model: vertical (downwards) magnetic field (nT)"

)

m = build_model(input_data, nmax=6)

plot_model_contours(m)

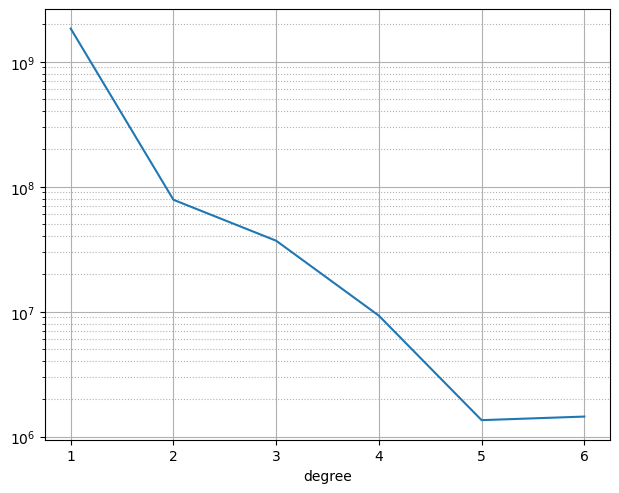

The power spectrum is a common way to assess and compare models, showing how much power is concentrated at each spherical harmonic degree. We can use plot_power_spectrum from ChaosMagPy:

plot_power_spectrum(power_spectrum(m));

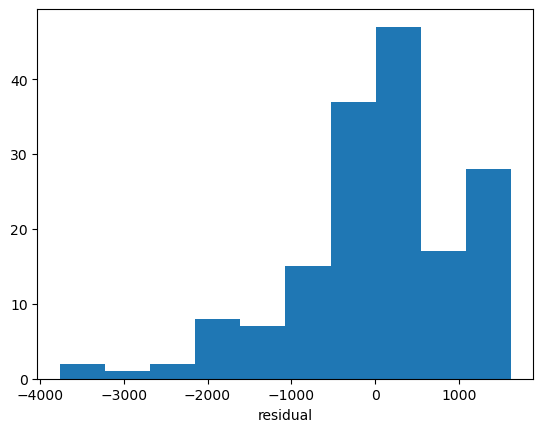

… and we can look at the residuals between the input data and the model:

def append_residuals(ds=input_data, m=m):

# Evaluate model at the data locations

B_r, B_t, B_p = synth_values(m, 6371.2 + ds["alt(m)"]/1e3, ds["Colat"], ds["Longitude"])

# Assign data-model residuals to the dataset

ds["res_N"] = ds["X"] - (-B_t)

ds["res_E"] = ds["Y"] - (B_p)

ds["res_C"] = ds["Z"] - (-B_r)

return ds

ds = append_residuals(input_data, m)

plt.hist(ds["res_C"])

plt.xlabel("residual");

ds.hvplot.scatter(

x="Longitude", y="Latitude", c="res_C",

coastline=True, projection=ccrs.PlateCarree(), global_extent=True,

clabel="Data-model residual: vertical (downwards) magnetic field (nT)"

)

WARNING:param.main: geo option cannot be used with kind='scatter' plot type. Geographic plots are only supported for following plot types: ['bivariate', 'contour', 'contourf', 'dataset', 'hexbin', 'image', 'labels', 'paths', 'points', 'points', 'polygons', 'quadmesh', 'rgb', 'vectorfield']

/opt/conda/lib/python3.11/site-packages/xarray/core/utils.py:494: FutureWarning: The return type of `Dataset.dims` will be changed to return a set of dimension names in future, in order to be more consistent with `DataArray.dims`. To access a mapping from dimension names to lengths, please use `Dataset.sizes`.

warnings.warn(

Exercise#

Calculate the RMS misfit for each vector component (Hint: we have stored the residuals in the dataset, accessible as

ds["res_N"],ds["res_E"],ds["res_C"])Experiment with changing the SH degree trunction in the model

Experiment with using data just over Europe

Add random noise into the data to observe the effect

Use viresclient to access the monthly means dataset and produce a model using those instead

def select_europe(ds=input_data):

"""Subselect the dataset down to just cover Europe"""

colat_minmax = (20, 60)

lon_minmax = (-10, 40)

ds = ds.where(

(ds["Colat"] > colat_minmax[0]) & (ds["Colat"] < colat_minmax[1]) &

(ds["Long"] > lon_minmax[0]) & (ds["Long"] < lon_minmax[1]),

).dropna(dim="index")

return ds